BLEURT is a metric for natural language generators boasting unprecedented accuracy

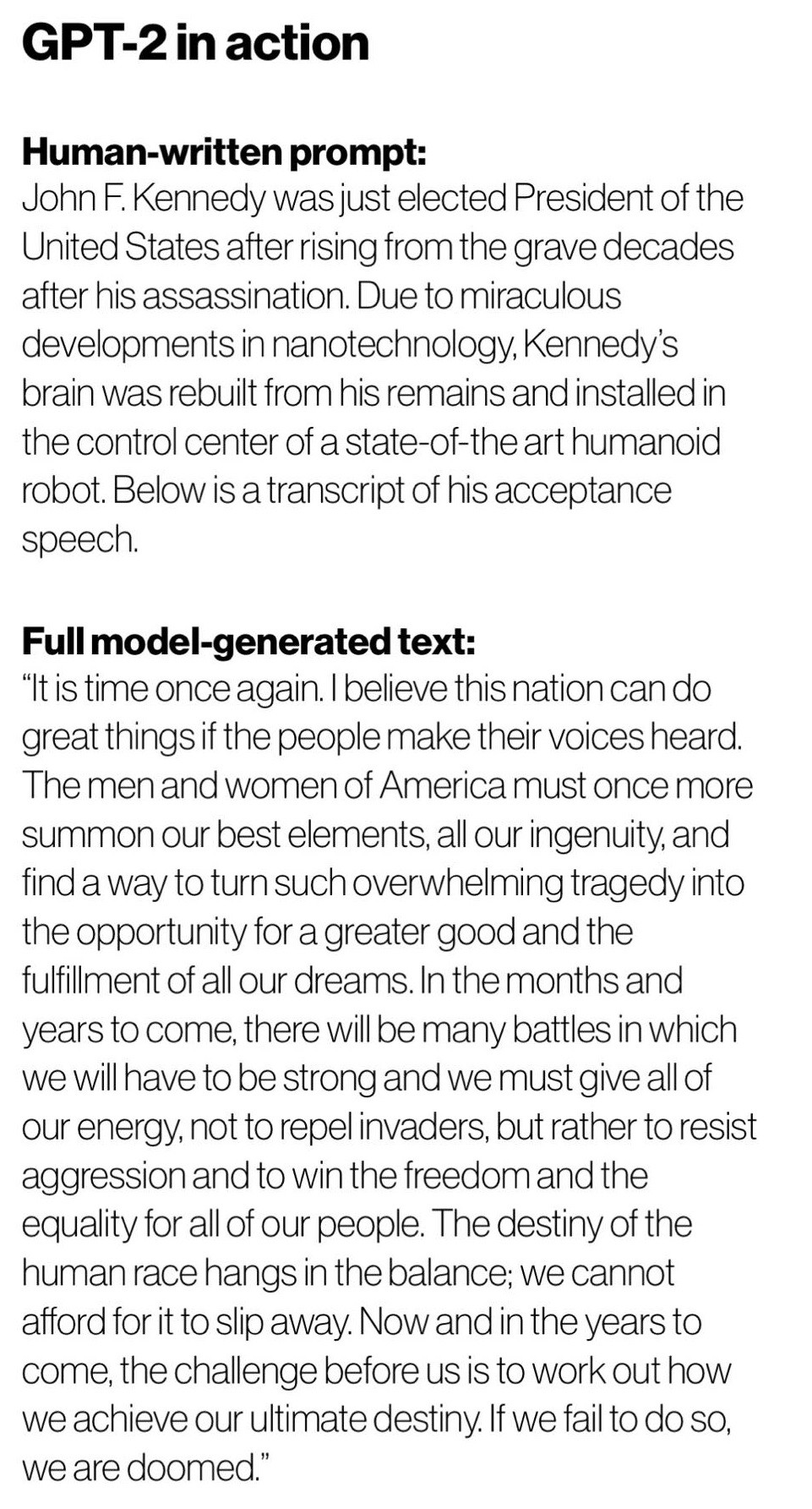

by Ather FawazNatural Language Generation (NLG) models have seen tremendous growth in the last few years. Their ability to summarize and complete text has improved significantly. So has their ability to engage in conversations. One example of this would be OpenAI's model that the company decided against releasing because it had the potential to spew fake news.

Given the rapid growth of NLG models, quantifying their performance has become an important research question. Generally, there are two ways to measure the performance of these models: human evaluation and automatic metrics like the bilingual evaluation understudy (BLEU). Both have their merits and demerits—the former is labor-intensive while the latter often pales in comparison to the former's accuracy.

Considering this, researchers at Google have developed BLEURT, a novel automatic metric for natural language models that delivers ratings that are robust and reach an unprecedented level of quality close to human metrics.

At the heart of BLEURT is machine learning. And for any machine learning model, perhaps the most important commodity is the data it trains on. However, the training data for an NLG performance metric is limited. Indeed, on the WMT Metrics Task dataset, which is currently the largest collection of human ratings, contains approximately 260,000 human ratings apropos the news domain only. If it were to be used as the sole training dataset, the WMT Metric Task dataset would lead to a loss of generality and robustness of the trained model.

To help deal with this issue, the researchers employed transfer learning. To begin with, the team used the contextual word representations of BERT since it has already been successfully incorporated into NLG metrics like YiSi and BERTscore. Following this, the researchers introduced a novel pre-training scheme to increase BLEURT's robustness and accuracy. Likewise, it also helped the model cope with quality drift.

BLEURT relies on “warming-up” the model using millions of synthetic sentence pairs before fine-tuning on human ratings. We generated training data by applying random perturbations to sentences from Wikipedia. Instead of collecting human ratings, we use a collection of metrics and models from the literature (including BLEU), which allows the number of training examples to be scaled up at very low cost.

The researchers pre-trained BLEURT twice. In the first phase, their objective was language modeling while in the second phase, their objective was evaluating the NLG models. After this, the team fine-tuned the model on the WMT Metrics dataset.

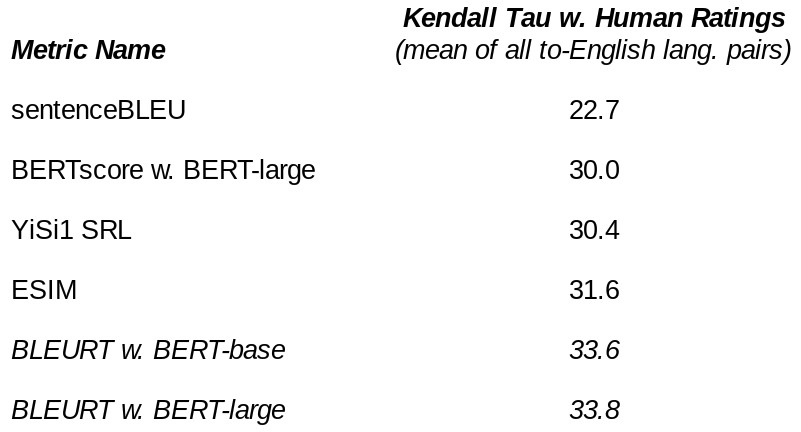

Once trained, BLEURT was pitted against competing approaches and was shown to be superior to current metrics. It also correlates well with human ratings. Concretely, BLEURT was shown to be approximately 48% more accurate than BLEU on the WMT Metrics Shared Task of 2019. Other results are summarized below.

BLEURT is an attempt to "capture NLG quality beyond surface overlap", the researchers wrote. The metric yields SOTA performance on two academic benchmarks. For the future, the team is investigating its potential in improving Google products and will be investigating multilinguality and multimodality in subsequent researches.

BLUERT runs in Python 3, relies on TensorFlow, and is available on GitHub. If you are interested in finding out more, you may study the paper published on arXiv.