How AI is stopping the next great flu before it starts

Machine learning can slash development times while saving millions on R&D.

by Andrew Tarantola

Immune systems across the globe have been working overtime this winter as a devastating flu season has taken hold. More than 180,000 Americans have been hospitalized and 10,000 have died in recent months, according to the CDC, while the novel coronavirus (the disease it causes is now officially designated COVID-19) has spread internationally at an alarming rate. Fears of a growing worldwide outbreak even prompted the precautionary cancellation of MWC 2020, barely a week before it was slated to open in Barcelona. But in the near future, AI-augmented drug development could help produce vaccines and treatments fast enough to halt the spread of deadly viruses before they become global pandemics.

Conventional methods for drug and vaccine development are wildly inefficient. Researchers can spend nearly a decade laboriously vetting candidate molecule after candidate molecule via intensive trial-and-error techniques. According to a 2019 study by the Tufts Center for the Study of Drug Development, developing a single drug treatment costs $2.6 billion on average (more than double what it cost in 2003), and only around 12 percent of drugs that enter clinical development ever gain FDA approval.
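The 12 percent figure is easier to grasp as a quick calculation. The Python sketch below assumes, purely for illustration, that every clinical candidate has the same independent 12 percent chance of approval, which real drug pipelines do not guarantee.

```python
# Back-of-the-envelope reading of the ~12 percent approval rate quoted above.
# Simplifying assumption (not from the Tufts study): every clinical candidate
# independently has the same chance of approval.

P_APPROVAL = 0.12  # share of clinical candidates that eventually win FDA approval


def expected_candidates_per_approval(p: float) -> float:
    """Mean number of candidates tested per approval (mean of a geometric distribution)."""
    return 1 / p


def chance_of_at_least_one_approval(p: float, n: int) -> float:
    """Probability that at least one of n independent candidates is approved."""
    return 1 - (1 - p) ** n


if __name__ == "__main__":
    print(f"~{expected_candidates_per_approval(P_APPROVAL):.1f} candidates per approval")
    for n in (1, 5, 10):
        chance = chance_of_at_least_one_approval(P_APPROVAL, n)
        print(f"{n:>2} candidates -> {chance:.0%} chance of at least one approval")
```

Under that assumption, it takes roughly eight clinical candidates per approved drug, and even a portfolio of 10 candidates has only about a 72 percent chance of producing a single approval.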

"You always have the FDA," Dr. Eva-Maria Strauch, Assistant Professor of Pharmaceutical & Biomedical Sciences at University of Georgia, told Engadget. "The FDA really takes five to 10 years to approve a drug."

However, with the help of machine learning systems, biomedical researchers can essentially flip the trial-and-error methodology on its head. Instead of systematically trying each potential treatment manually, researchers can use an AI to sort through massive databases of candidate compounds and recommend the ones most likely to be effective.
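In miniature, that ranking step looks something like the Python sketch below. It swaps the trained model for the simplest ligand-based screening technique, nearest-neighbor Tanimoto similarity to compounds already known to work, and represents each molecule as a set of hypothetical feature IDs rather than a real chemical fingerprint. The compound names and features are invented for illustration.

```python
# Minimal similarity-based virtual screening sketch (illustrative, not any lab's pipeline).
# Assumption: each compound is reduced to a set of hypothetical substructure feature IDs,
# standing in for a real molecular fingerprint.

from typing import Dict, List, Set, Tuple


def tanimoto(a: Set[int], b: Set[int]) -> float:
    """Tanimoto (Jaccard) similarity between two feature sets."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def rank_candidates(
    library: Dict[str, Set[int]],
    known_actives: List[Set[int]],
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Score each library compound by its best similarity to any known active; keep top_k."""
    scored = [
        (name, max(tanimoto(features, active) for active in known_actives))
        for name, features in library.items()
    ]
    scored.sort(key=lambda item: item[1], reverse=True)  # stable: ties keep library order
    return scored[:top_k]


if __name__ == "__main__":
    actives = [{1, 2, 3, 4}, {2, 5, 6}]  # hypothetical compounds already known to work
    library = {
        "cmpd_A": {1, 2, 3},      # close analogue of the first active
        "cmpd_B": {7, 8, 9},      # shares nothing with either active
        "cmpd_C": {2, 5, 6, 10},  # close analogue of the second active
        "cmpd_D": {4, 9},
    }
    for name, score in rank_candidates(library, actives):
        print(f"{name}: {score:.2f}")
```

A real screen would use proper molecular fingerprints and a model trained on assay data, but the workflow is the same: score every compound, sort, and hand researchers a short list to test in the lab.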

"A lot of the questions that are really facing drug development teams are no longer the sorts of questions that people think that they can handle from just sorting through data in their heads," S. Joshua Swamidass, a computational biologist at Washington University, told The Scientist in 2019. "There's got to be some sort of systematic way of looking at large amounts of data . . . to answer questions and to get insight into how to do things."

For example, terbinafine is an oral antifungal medication that was marketed in 1996 as Lamisil, a treatment for fungal nail infections. Within three years, however, multiple people had reported adverse effects from the medication, and by 2008 three people had died of liver toxicity and another 70 had been sickened. Doctors discovered that a metabolite of terbinafine (TBF-A) was the cause of the liver damage, but at the time they couldn't figure out how it was being produced in the body.

This metabolic pathway remained a mystery to the medical community for a decade, until 2018, when Washington University graduate student Na Le Dang trained an AI on metabolic pathways and had the machine work out the possible ways in which the liver could break terbinafine down into TBF-A. It turns out that creating the toxic metabolite is a two-step process, one that is far more difficult to identify experimentally but simple enough for an AI's powerful pattern-recognition capabilities to spot.
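The hard part of a multi-step pathway is combinatorial: every sequence of reactions has to be considered, not just single steps. The following sketch is not Dang's method. It assumes a model has already assigned each individual transformation a probability, then enumerates and ranks every route of up to two steps. All molecule names and probabilities are hypothetical.

```python
# Ranking multi-step metabolic routes from per-step predictions (illustrative only).
# Hypothetical: a model has already scored each single transformation; the molecule
# names and probabilities below are invented for the example.

from typing import Dict, List, Tuple

# molecule -> {product: predicted probability that the liver performs this step}
STEP_PROBS: Dict[str, Dict[str, float]] = {
    "parent_drug": {"intermediate_1": 0.6, "intermediate_2": 0.3, "toxic_metabolite": 0.05},
    "intermediate_1": {"toxic_metabolite": 0.5, "inert_product": 0.4},
    "intermediate_2": {"toxic_metabolite": 0.2},
}

Route = Tuple[List[str], float]


def routes_to(start: str, target: str, max_steps: int = 2) -> List[Route]:
    """Enumerate routes of up to max_steps from start to target, each scored by the
    product of its per-step probabilities (treating steps as independent), best first."""
    found: List[Route] = []

    def walk(node: str, path: List[str], score: float) -> None:
        if node == target:
            found.append((path, score))
            return
        if len(path) - 1 >= max_steps:  # path holds (steps taken + 1) molecules
            return
        for product, p in STEP_PROBS.get(node, {}).items():
            walk(product, path + [product], score * p)

    walk(start, [start], 1.0)
    return sorted(found, key=lambda route: route[1], reverse=True)


if __name__ == "__main__":
    for path, score in routes_to("parent_drug", "toxic_metabolite"):
        print(" -> ".join(path), f"(score {score:.2f})")
```

With these made-up numbers, the two-step route through `intermediate_1` (score 0.30) outranks the direct one-step route (0.05), the kind of indirect pathway that is easy to miss at the lab bench.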

In fact, more than 450 medicines have been pulled from the market in the past 50 years, many of them for causing the kind of liver toxicity seen with Lamisil. The problem is widespread enough that the FDA, together with other federal agencies, launched the Tox21.gov website, an online database of molecules and their relative toxicity against various important human proteins. By training an AI on this dataset, researchers hope to determine more quickly whether a potential treatment will cause serious side effects.
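A model trained on a table like this produces a risk estimate for each compound rather than a simple yes or no. The sketch below illustrates the idea with a Bernoulli naive Bayes classifier, chosen only because it fits in a few lines, trained on a made-up Tox21-style table in which each compound has a few binary substructure flags and a 0/1 result from a single toxicity assay. The feature meanings, data and 0.5 flagging threshold are all hypothetical.

```python
# Toy toxicity-flagging model: Bernoulli naive Bayes with Laplace (+1) smoothing.
# Hypothetical data: each compound is a list of 0/1 substructure flags, and the label
# is 1 if it was active (toxic) in a single made-up assay.

import math
from typing import List, Sequence, Tuple

Model = Tuple[List[float], List[List[float]]]  # (class priors, per-class P(flag = 1))


def train_bernoulli_nb(X: Sequence[Sequence[int]], y: Sequence[int]) -> Model:
    """Estimate smoothed class priors and per-class flag frequencies."""
    n_features = len(X[0])
    priors: List[float] = []
    likelihoods: List[List[float]] = []
    for c in (0, 1):
        rows = [x for x, label in zip(X, y) if label == c]
        priors.append((len(rows) + 1) / (len(X) + 2))
        likelihoods.append(
            [(sum(r[j] for r in rows) + 1) / (len(rows) + 2) for j in range(n_features)]
        )
    return priors, likelihoods


def toxicity_risk(model: Model, x: Sequence[int]) -> float:
    """Posterior probability that compound x is toxic in the assay."""
    priors, likelihoods = model
    log_post = []
    for c in (0, 1):
        lp = math.log(priors[c])
        for xj, pj in zip(x, likelihoods[c]):
            lp += math.log(pj if xj else 1 - pj)
        log_post.append(lp)
    # Normalize over both classes: P(toxic | x) = 1 / (1 + P(x, safe) / P(x, toxic))
    return 1 / (1 + math.exp(log_post[0] - log_post[1]))


if __name__ == "__main__":
    X = [[1, 0, 1], [1, 1, 0], [1, 0, 0], [0, 1, 1], [0, 0, 1], [0, 1, 0]]
    y = [1, 1, 1, 0, 0, 0]  # 1 = toxic in the hypothetical assay
    model = train_bernoulli_nb(X, y)
    FLAG_AT = 0.5  # hypothetical review threshold
    for candidate in ([1, 1, 1], [0, 0, 0]):
        risk = toxicity_risk(model, candidate)
        print(candidate, f"risk={risk:.2f}", "FLAG for review" if risk >= FLAG_AT else "ok")
```

On this toy data, the compound carrying every flag scores a 0.64 risk and is flagged, while the compound carrying none scores 0.36.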

"We've had a challenge in the past of essentially, 'Can you predict the toxicity of these compounds in advance?'" Sam Michael CIO for the National Center for Advancing Translational Sciences which helped create the database, told Engadget. "This is the exact opposite of what we do for small molecule screening for pharmaceuticals. We don't want to find a hit, we want to say 'Hey, there's a likelihood for this [compound to be toxic].'"

When AIs aren't busy unraveling decade-old medical mysteries, they're helping to design a better flu vaccine. In 2019, researchers at Flinders University in Australia used an AI to "turbocharge" a common flu vaccine so that the body would produce higher concentrations of antibodies when exposed to it. Well, technically, the researchers didn't "use" an AI so much as turn it on and get out of its way as it designed a vaccine entirely on its own.

The team, led by Flinders University professor of medicine Nikolai Petrovsky, first built the AI Sam (Search Algorithm for Ligands). Why they didn't call it Sal is neither here nor there.

Sam is trained to differentiate molecules that are effective against the flu from those that are not. The team then trained a second program to generate trillions of potential chemical compound structures and fed those back into Sam, which set about deciding whether or not they'd be effective. Researchers then took the top candidates and physically synthesized them. Subsequent animal trials confirmed that the augmented vaccine was more effective than its unimproved predecessor. Initial human trials started here in the US at the beginning of the year and are expected to last for about 12 months. Should the approval process go smoothly, the turbocharged vaccine could be publicly available within a couple of years. Not bad for a vaccine that took only two years (rather than the usual five to 10) to develop.
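The division of labor described above, one program proposing structures and another judging them, can be sketched as a generate-then-screen loop. This is not the Flinders team's code: the fragment-based generator, the fragment names and the `score()` function are hypothetical stand-ins, and the real system evaluated vastly more candidates.

```python
# Generate-then-screen sketch: a generator proposes candidate structures, a scorer rates
# them, and only the top k survive for synthesis. Hypothetical throughout: "structures"
# are tuples of made-up fragment names and score() is a toy stand-in for a trained model.

import heapq
import itertools
from typing import Iterator, List, Tuple

FRAGMENTS = ["amide", "aryl", "ester", "hydroxyl", "methyl"]  # hypothetical building blocks
WEIGHTS = {"amide": 1.0, "aryl": 0.8, "hydroxyl": 0.5, "ester": -0.2, "methyl": 0.1}


def generate(max_len: int = 3) -> Iterator[Tuple[str, ...]]:
    """Lazily yield every ordered fragment combination of length 1..max_len."""
    for n in range(1, max_len + 1):
        yield from itertools.product(FRAGMENTS, repeat=n)


def score(candidate: Tuple[str, ...]) -> float:
    """Toy model: sum of per-fragment weights minus a penalty that grows with size."""
    return sum(WEIGHTS[f] for f in candidate) - 0.3 * len(candidate) ** 2


def top_candidates(k: int = 3, max_len: int = 3) -> List[Tuple[float, Tuple[str, ...]]]:
    """Stream every generated candidate through score(), keeping only the k best."""
    return heapq.nlargest(k, ((score(c), c) for c in generate(max_len)))


if __name__ == "__main__":
    for s, structure in top_candidates():
        print(f"{s:.2f}", "-".join(structure))
```

Because `heapq.nlargest` consumes the generator lazily and holds only `k` results at a time, memory use does not grow with the number of candidates, which matters when a generator is turning out trillions of structures.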

While machine learning systems can sift through enormous datasets far faster than human researchers and can make accurate, informed estimates from far more tenuous connections, humans will remain in the drug development loop for the foreseeable future. For one thing, who else is going to generate, collate, index, organize and label all of the training data needed to teach AIs what they're supposed to be looking for?

Even as machine learning systems become more competent, they're still vulnerable to producing sub-optimal results when using flawed or biased data, just like every other AI. "Many datasets used in medicine are derived from mostly white, North American and European populations," Dr. Charles Fisher, the founder and CEO of Unlearn.AI, wrote in November. "If a researcher applies machine learning to one of these datasets and discovers a biomarker to predict response to a therapy, there is no guarantee the biomarker will work well, if at all, in a more diverse population." To counter the skewing effects of data bias, Fisher advocates for "larger datasets, more sophisticated software, and more powerful computers."
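One practical guard against the problem Fisher describes is to report a model's performance separately for each population instead of as a single average. The sketch below does that for a hypothetical set of predictions; the group labels, records and 0.1 tolerance are assumptions for illustration.

```python
# Per-group evaluation sketch: report accuracy for each population separately so that a
# strong overall number cannot hide a weak subgroup. Groups and records are hypothetical.

from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Record = Tuple[str, int, int]  # (population group, true response, predicted response)


def accuracy_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Fraction of correct predictions within each group."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, truth, pred in records:
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def lagging_groups(per_group: Dict[str, float], tolerance: float = 0.1) -> Dict[str, float]:
    """Groups whose accuracy trails the best-served group by more than `tolerance`."""
    best = max(per_group.values())
    return {g: acc for g, acc in per_group.items() if best - acc > tolerance}


if __name__ == "__main__":
    records: List[Record] = (
        [("group_A", 1, 1)] * 9 + [("group_A", 1, 0)]  # well represented in the data
        + [("group_B", 1, 1), ("group_B", 0, 0), ("group_B", 1, 0), ("group_B", 0, 1)]
    )
    overall = sum(t == p for _, t, p in records) / len(records)
    per_group = accuracy_by_group(records)
    print(f"overall accuracy: {overall:.0%}")
    print("per group:", per_group)
    print("needs attention:", lagging_groups(per_group))
```

Here the overall accuracy is about 79 percent, which hides the fact that the model is right only half the time for the smaller `group_B`.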

Another essential component will be clean data, as Kebotix CEO Dr. Jill Becker explained to Engadget. Kebotix is a 2018 startup that employs AI in concert with robotics to design and develop exotic materials and chemicals.

"We have three data sources," she explained. "We have the capacity to generate our own data... think semi empirical calculations. We also have our own synthetic lab to generate data and then... use external data." This external data can come from either open or subscription journals as well as from patents and the company's research partners. But regardless of the source, "we spend a lot of time cleaning it," Becker noted.

"Making sure that the data has the proper associated metadata for these models is absolutely critical," Michael chimed in. "And it doesn't just happen, you have to put real effort into it. It's tough because it's expensive and it's time consuming."

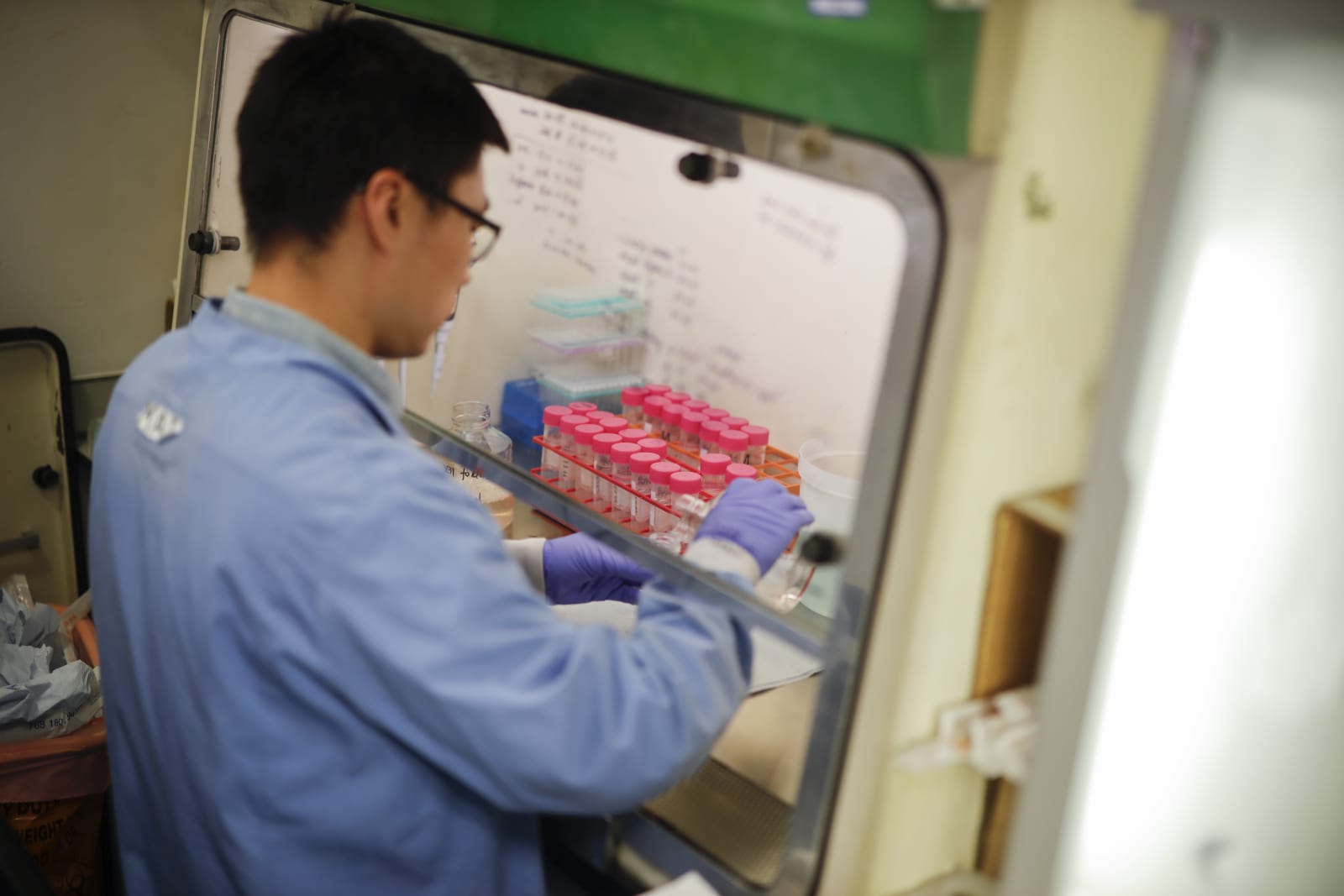

Image: TOLGA AKMEN via Getty Images (Lab technician)