Google's Latest ARCore API Needs Just One Camera For Depth Detection

by Shane McGlaun

Google has updated ARCore, its augmented reality platform offered on both Android and iOS devices. The new ARCore Depth API allows any ARCore-supported phone to create a depth map using just a single RGB camera. The depth map is created by capturing multiple images from different angles as the user moves their phone and comparing them.
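To give a feel for why comparing images from different angles reveals depth, here is a minimal Python sketch of the textbook two-view relation (depth = focal length × baseline / disparity). It is not Google's implementation, whose details are not described here; the function name and every number in it are hypothetical and chosen only for illustration.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Textbook rectified two-view relation: depth = focal * baseline / disparity.

    focal_px     -- camera focal length in pixels (assumed known)
    baseline_m   -- how far the phone moved sideways between the two shots, in meters
    disparity_px -- how many pixels the same point shifted between the two shots
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical values: 500 px focal length, phone moved 5 cm between frames.
near = depth_from_disparity(500.0, 0.05, 50.0)  # point shifted a lot: close to the camera
far = depth_from_disparity(500.0, 0.05, 5.0)    # point shifted a little: farther away
print(near, far)
```

The takeaway is that points which shift more between frames are closer to the camera, which is the cue a single moving camera can exploit.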

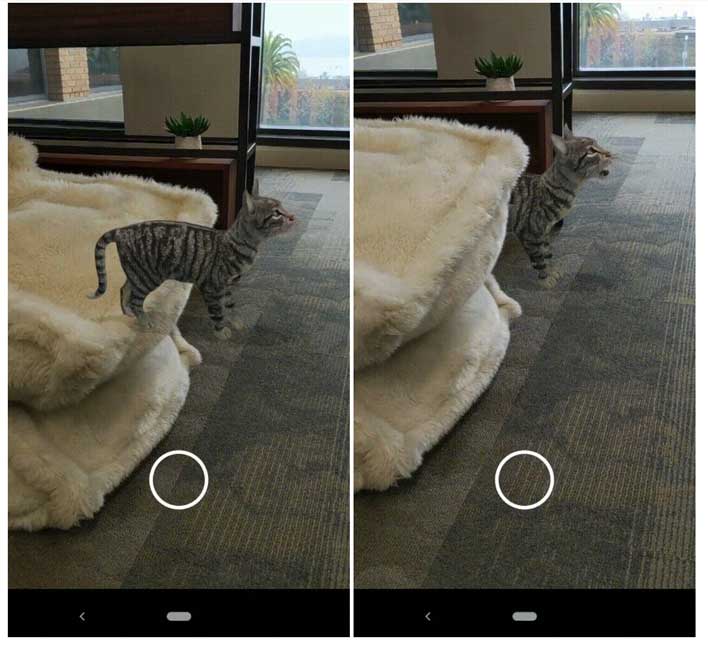

The image above gives a good example of how the new feature benefits AR users. The app being used is called AR animals. In one image, the cat's hind legs appear to stand on top of the furniture. The updated version treats the background as 3D with depth and places the cat's back legs behind the furniture, as they would appear in the real world.
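The occlusion effect described here can be thought of as a per-pixel depth comparison. The Python sketch below illustrates that general idea only; it does not use the ARCore API, and the function name, the list-of-lists image representation, and the sample values are all assumptions made for illustration.

```python
def composite_with_occlusion(camera, virtual, scene_depth, virtual_depth):
    """Overlay a virtual layer on a camera image, hiding occluded virtual pixels.

    All four arguments are same-sized 2D lists (rows of pixels).
    A virtual pixel of None means "nothing rendered here".
    A virtual pixel is drawn only if it is closer to the camera than
    the real surface recorded in the depth map at that pixel.
    """
    output = []
    for cam_row, virt_row, scene_row, vdepth_row in zip(
        camera, virtual, scene_depth, virtual_depth
    ):
        row = []
        for cam_px, virt_px, scene_d, virt_d in zip(
            cam_row, virt_row, scene_row, vdepth_row
        ):
            visible = virt_px is not None and virt_d < scene_d
            row.append(virt_px if visible else cam_px)
        output.append(row)
    return output


# Hypothetical one-row scene: a sofa 1 m away on the left, open space (3 m) beyond it.
camera = [["sofa", "floor", "wall"]]
virtual = [["cat", "cat", None]]             # the cat is rendered 2 m away
scene_depth = [[1.0, 3.0, 3.0]]              # meters, from the depth map
virtual_depth = [[2.0, 2.0, float("inf")]]
result = composite_with_occlusion(camera, virtual, scene_depth, virtual_depth)
print(result)
```

Without a depth map, every virtual pixel would be drawn on top of the camera image, which is how the cat ends up standing on the furniture instead of behind it.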

The more realistic AR function will be rolled out starting today to some of the 200 million ARCore-enabled Android smartphones that have the Google app installed. The single-lens approach for depth maps lowers the barrier to entry for smartphones because makers are not required to integrate specialized cameras and sensors. However, the Depth API will improve with better hardware in phones.

Adding sensors such as time-of-flight allows for dynamic occlusion, where virtual objects can appear hidden behind moving real-world objects. Even without such sensors, the new update means that the Depth API will work on any device supporting ARCore. Google ARCore has been around for a while; it first launched in early 2018 when it exited beta for Android and iOS. At the time, Google said that ARCore was supported on over 100 million devices.