Google's new depth feature makes its AR experiences more realistic

Seeing virtual objects behind real-world ones makes them more lifelike.

by Nicole Lee

Google has been experimenting with ARCore for the better part of two years, adding more features to its AR development platform over time. Back at I/O this year, Google introduced Environmental HDR, which brings real-world lighting to AR objects and scenes. Today, it's incorporating a Depth API that will introduce occlusion, 3D understanding, and a new level of realism.

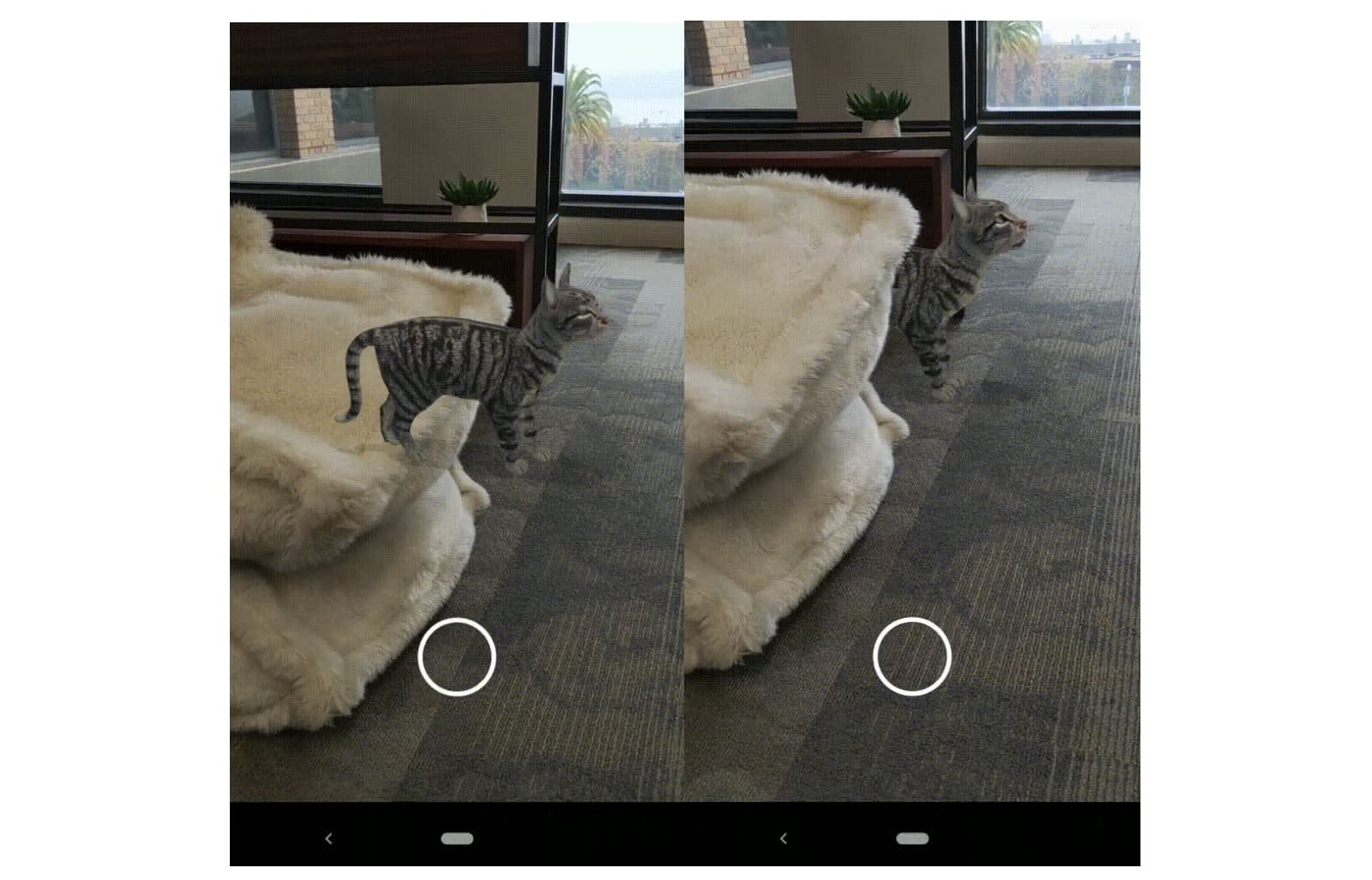

Google is bringing the new Depth feature first to AR in Search, which it introduced earlier this year with augmented reality animals. For example, if you search for the word "cat", you'll see an image of a 3D cat in its Google search card. Select "View in your space" and the app will access your camera, showing you the cat in the real world around you. To see what ARCore's new Depth API can do, you can then toggle occlusion on or off. With occlusion disabled, the cat appears to float on top of the environment; with it enabled, the cat is partially hidden behind furniture or other real-world objects.
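To make the occlusion toggle concrete, here is a minimal sketch of the general idea: a per-pixel depth test. This is not ARCore's actual API or Google's implementation. The function name `composite_row`, its parameters, and the sample values are all hypothetical, and a real renderer would do this on the GPU over full images rather than over a short list of labels.

```python
from typing import List, Optional


def composite_row(
    camera: List[str],
    virtual: List[str],
    real_depth: List[float],
    virtual_depth: List[Optional[float]],
    occlusion: bool = True,
) -> List[str]:
    """Blend one row of camera pixels with one row of virtual-object pixels.

    Depths are distances from the camera in metres. A virtual depth of None
    means the virtual object does not cover that pixel. All names here are
    illustrative assumptions, not part of any real AR SDK.
    """
    out = []
    for cam_px, virt_px, d_real, d_virt in zip(
        camera, virtual, real_depth, virtual_depth
    ):
        if d_virt is None:
            # No virtual content at this pixel: show the camera image.
            out.append(cam_px)
        elif occlusion and d_real < d_virt:
            # The real surface is closer than the virtual object: hide the object.
            out.append(cam_px)
        else:
            # Occlusion is off, or the virtual object is in front: draw it.
            out.append(virt_px)
    return out


# A sofa 1 m away covers the first two pixels; the floor is 3 m away.
# The virtual cat sits 2 m away and covers the first and third pixels.
camera = ["sofa", "sofa", "floor"]
virtual = ["cat", "cat", "cat"]
real_depth = [1.0, 1.0, 3.0]
virtual_depth = [2.0, None, 2.0]

with_occlusion = composite_row(camera, virtual, real_depth, virtual_depth, True)
without_occlusion = composite_row(camera, virtual, real_depth, virtual_depth, False)
```

With occlusion on, the cat is drawn only where it is nearer than the real scene, so the sofa hides part of it. With occlusion off, the cat is drawn everywhere it covers, which is why it seems to float on top of the furniture.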

On top of that, Google has also partnered with Houzz to incorporate the Depth API in its home design app. Starting today, Houzz users can see how this works with the "View in My Room 3D" experience. Instead of just placing furniture in the room, you can use the new occlusion option to get a more realistic preview of how the furniture will actually look. So, for example, you'll be able to see a chair behind the table, instead of floating on top of it.

In a demo at Google's San Francisco office, I saw how developers could use the new ARCore Depth API to create a depth map using a regular smartphone camera; red indicates areas that are closer, while blue indicates areas that are farther away. Beyond occlusion, Google says this depth understanding of the world also allows developers to play with real-world physics, surface interaction and more.
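As a rough illustration of the red-to-blue depth visualization described above, the sketch below maps a distance to a color by linear interpolation. The function name `depth_to_rgb` and the near and far limits of 0.5 m and 5 m are assumptions chosen for the example; the article does not say which color ramp or range Google's demo uses.

```python
from typing import Tuple


def depth_to_rgb(
    depth_m: float, near_m: float = 0.5, far_m: float = 5.0
) -> Tuple[int, int, int]:
    """Map a depth in metres to an (R, G, B) color: red when near, blue when far.

    near_m and far_m are hypothetical defaults, not values from ARCore.
    """
    # Normalize depth to the range 0..1, clamping anything outside near/far.
    t = (depth_m - near_m) / (far_m - near_m)
    t = min(1.0, max(0.0, t))
    # Fade red out and blue in as the surface gets farther away.
    return (round(255 * (1 - t)), 0, round(255 * t))


near_color = depth_to_rgb(0.5)   # pure red at the near limit
far_color = depth_to_rgb(5.0)    # pure blue at the far limit
beyond_far = depth_to_rgb(10.0)  # clamped to the far color
```

Applying a function like this to every pixel of a depth map would produce the kind of red-near, blue-far image shown in the demo.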

In one example I tried, I threw virtual objects around a real-world living room setup. The digital objects respected the curvature and angles of the furniture, piling up in a narrow cavity or spreading out across a wider, flatter space. Virtual robots climbed over real-world chairs, and virtual snow fell on each individual leaf of a real-world plant. One particularly fun demo was a virtual food fight, where I could use real-world furniture as barricades.

According to Google, all of this is possible on the more than 200 Android devices that are already ARCore-compatible. It doesn't require specialty sensors or equipment, though the addition of new sensors in the future will likely make the tech even more lifelike.

Aside from Google Search and the Houzz app, ARCore's Depth API isn't implemented in other consumer applications just yet. If developers are interested in trying this out in their own projects, they'll have to fill out this form to apply.